If you need to access an AWS resource from your environment (whether from your local machine or a CI/CD pipeline), you'll need your permanent AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY. Your deployed application must also have, and use, similar credentials (though temporary ones) to access the resources it needs to start and run successfully. However, we can't (and...
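As a rough illustration (not taken from the post itself), the sketch below reads the standard environment variables that the AWS CLI and SDKs look for. It tells long-term and temporary credentials apart by checking `AWS_SESSION_TOKEN`, because temporary credentials issued by AWS STS always come with a session token. The function name `load_aws_credentials` and the shape of the returned dictionary are hypothetical choices for this example.

```python
import os
from typing import Mapping, Optional


def load_aws_credentials(env: Mapping[str, str] = os.environ) -> Optional[dict]:
    """Read AWS credentials from environment variables (hypothetical helper).

    Returns None when the key pair is missing. Credentials are classified as
    temporary when AWS_SESSION_TOKEN is also set, since STS-issued temporary
    credentials always include a session token.
    """
    key_id = env.get("AWS_ACCESS_KEY_ID")
    secret = env.get("AWS_SECRET_ACCESS_KEY")
    if not key_id or not secret:
        return None
    token = env.get("AWS_SESSION_TOKEN")
    return {
        "access_key_id": key_id,
        "secret_access_key": secret,
        "session_token": token,
        "temporary": bool(token),
    }


if __name__ == "__main__":
    creds = load_aws_credentials()
    if creds is None:
        print("No AWS credentials found in the environment")
    else:
        kind = "temporary" if creds["temporary"] else "long-term"
        # Never print the secret itself; only report which kind was found.
        print(f"Found {kind} credentials for key {creds['access_key_id'][:4]}...")
```

Passing a plain dictionary instead of `os.environ` makes the helper easy to exercise without touching real credentials.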

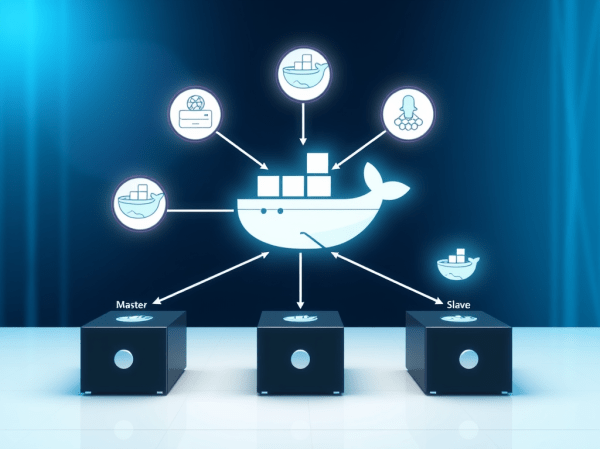

Container-based Virtualization of a Hadoop Cluster using Docker

Hadoop is a collection of open-source software utilities: a framework that supports the processing of large data sets in a distributed computing environment. Its core consists of HDFS (Hadoop Distributed File System), which is used for storage; MapReduce, which is used to define the data processing task; and YARN, which actually runs the data...
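To make the MapReduce part more concrete, here is a minimal, single-process Python sketch of the classic word-count job. It only imitates the map, shuffle/sort and reduce phases locally; it does not use HDFS, YARN or any Hadoop API, and the function names `mapper`, `reducer` and `run_word_count` are illustrative.

```python
from itertools import groupby
from operator import itemgetter
from typing import Iterable, Iterator, Tuple


def mapper(line: str) -> Iterator[Tuple[str, int]]:
    """Map phase: emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def reducer(word: str, counts: Iterable[int]) -> Tuple[str, int]:
    """Reduce phase: sum all the counts emitted for a single key."""
    return word, sum(counts)


def run_word_count(lines: Iterable[str]) -> dict:
    # Map: apply the mapper to every input record.
    pairs = [pair for line in lines for pair in mapper(line)]
    # Shuffle/sort: group all values for the same key together,
    # as the framework does between the map and reduce phases.
    pairs.sort(key=itemgetter(0))
    # Reduce: one reducer call per distinct key.
    return dict(
        reducer(word, (count for _, count in group))
        for word, group in groupby(pairs, key=itemgetter(0))
    )


if __name__ == "__main__":
    data = ["the quick brown fox", "the lazy dog", "The end"]
    print(run_word_count(data))
```

In a real cluster, the map and reduce functions would run as tasks scheduled by YARN on many nodes, reading their input from and writing their output to HDFS.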